
From Assistant To Deputy: Setting Up An LLM Automation Platform In Corporate Environments

Andreas Kurz, Global Head of Digital Transformation, Alfagomma Group


In today’s business landscape, digital transformation is a necessity, and Artificial Intelligence (AI) has emerged as a critical enabler, extending beyond the tech sector to influence all industries. Once the domain of tech giants with substantial resources, AI technologies like Large Language Models (LLMs) and Generative AI (GenAI) are now accessible to businesses of all sizes.

As the pace of AI innovation accelerates and startups enter the market, the adoption of LLMs and GenAI is shifting from an opportunity to a strategic imperative for non-tech companies. However, while the strategic value is recognized, integrating these technologies into business processes presents challenges.

The Shift from Assistant to Deputy-Centered Approach

Currently, many organizations leverage LLMs in an assistant-like role, where the technology supports users by responding to prompts and assisting with tasks. While this approach provides value, it often requires significant human intervention, limiting the efficiency gains that can be achieved.

To truly transform business operations, a deputy-centered approach is needed. LLMs and GenAI take on a more autonomous role in this model, managing end-to-end processes with minimal human oversight. The technology acts as a deputy, executing tasks independently and only requiring human intervention for exceptional cases. This approach streamlines operations and enables the automation of more complex workflows previously beyond reach.

Challenges in Operationalizing a Deputy-Centered Approach

While the potential benefits of a deputy-centered approach are clear, several challenges must be addressed. Data protection, ethical AI usage, and operational costs are significant concerns. Building custom interfaces that ensure secure, optimized use of LLMs in a deputy capacity can also be complex and resource-intensive.

This article outlines a scalable architecture designed to securely integrate LLMs and GenAI into legacy systems, enabling organizations to transition from assistant to deputy-centered operations with minimal investment.

Designing the LLM/GenAI Platform for Deputy Operations

The platform must be designed with autonomy and scalability in mind to support a deputy-centered approach.

A typical interaction with an LLM in this model involves:

1. Business Event: Triggered by a document or data receipt.

2. Pre-Processing: Automated digitization and preparation of documents.

3. Submission to LLM: Sending digitized data with predefined prompts to the model.

4. Post-Processing: Autonomous management and utilization of the LLM’s output.
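The four steps above can be sketched as a small pipeline. This is only an illustration under stated assumptions: the names `BusinessEvent`, `PROMPTS`, `handle_event`, and `fake_llm` are hypothetical, the prompt wording is invented, and the model call is replaced by a stub so the example runs without any external service.

```python
from dataclasses import dataclass
from typing import Callable

# All names here are hypothetical; the article does not prescribe an implementation.


@dataclass
class BusinessEvent:
    """Step 1: a business event carrying a received document."""
    use_case: str      # e.g. "invoice" or "cv"
    raw_document: str  # stands in for a scanned file or email attachment


# Predefined prompts, one per use case (illustrative wording only).
PROMPTS = {
    "invoice": "Extract the invoice number and total amount from the text below.",
    "cv": "Summarize the candidate's skills from the text below.",
}


def pre_process(event: BusinessEvent) -> str:
    """Step 2: stand-in for digitization; here it only normalizes whitespace."""
    return " ".join(event.raw_document.split())


def submit_to_llm(use_case: str, text: str, llm: Callable[[str], str]) -> str:
    """Step 3: send the digitized text together with its predefined prompt."""
    return llm(f"{PROMPTS[use_case]}\n\n{text}")


def post_process(output: str) -> dict:
    """Step 4: wrap the model output in a record a downstream system could store."""
    return {"status": "processed", "result": output.strip()}


def handle_event(event: BusinessEvent, llm: Callable[[str], str]) -> dict:
    """Run steps 2 to 4 for one business event."""
    text = pre_process(event)
    output = submit_to_llm(event.use_case, text, llm)
    return post_process(output)


def fake_llm(prompt: str) -> str:
    """Placeholder for a real model call; echoes the last line of the prompt."""
    return prompt.splitlines()[-1]


record = handle_event(
    BusinessEvent("invoice", "Invoice  INV-1 \n total 100 EUR"), fake_llm
)
print(record)
```

Because only `PROMPTS` and the event's `use_case` change between scenarios, the same `handle_event` function could serve invoices, CVs, or contracts in this sketch.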

This workflow enables LLMs to operate with a high degree of independence, applying the same process to various use cases, such as invoice processing, CV analysis, and contract review. The architecture must support scalability and autonomy by incorporating the following principles:

• Microservices & Containerization: Each process step is a self-contained microservice, enabling modularity and independent operation.

• HTTP & REST APIs: Standardized, secure communication protocols that allow flexible integration.

• Serverless Architecture: Services are deployed on demand, reducing costs and enabling dynamic scaling.

• Event-Driven Design: Process steps are triggered by specific business events, allowing the LLM to act autonomously.
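As a rough illustration of the event-driven principle, the sketch below uses an in-process registry that maps event types to handler functions. The `EventBus` class, the event name `invoice.received`, and the payload fields are assumptions made for this example; a production platform would more likely rely on a managed message broker or a serverless trigger.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[dict], dict]


class EventBus:
    """Minimal in-process stand-in for a message broker (hypothetical)."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str) -> Callable[[Handler], Handler]:
        """Decorator that registers a handler for one event type."""
        def register(handler: Handler) -> Handler:
            self._handlers[event_type].append(handler)
            return handler
        return register

    def publish(self, event_type: str, payload: dict) -> List[dict]:
        """Call every handler subscribed to the event and collect the results."""
        return [handler(payload) for handler in self._handlers.get(event_type, [])]


bus = EventBus()


@bus.subscribe("invoice.received")
def digitize_invoice(payload: dict) -> dict:
    # Stand-in for the pre-processing microservice.
    return {"step": "pre-processing", "document_id": payload["document_id"]}


results = bus.publish("invoice.received", {"document_id": "doc-001"})
print(results)
```

Each handler knows nothing about the others, which mirrors the idea that every process step is a self-contained service reacting to a business event.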

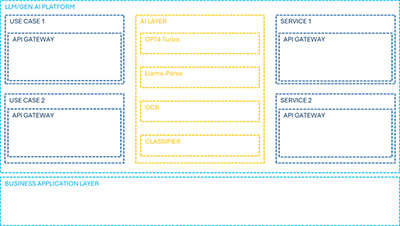

LLM/GenAI Platform Architecture

The architecture described in Figure 1 facilitates the transition to a deputy-centered approach by ensuring that business applications trigger events handled by autonomous microservices deployed as APIs. These services manage pre-processing, prompting, and post-processing tasks with minimal human intervention. The AI layer operates as an independent microservice, with LLMs deployed in a secure environment accessed via APIs. This setup enables the LLM to act as a true deputy, executing tasks autonomously across various business processes.
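One way to picture the AI layer as a secured, independently callable service is a thin gateway function that authenticates the caller and only accepts predefined use cases. The sketch below is an assumption-laden illustration: `API_KEYS`, `PROMPT_TEMPLATES`, the request fields, and the status codes are hypothetical, and the model is passed in as a plain callable rather than a real endpoint.

```python
import hmac
from string import Template
from typing import Callable, Dict

# Hypothetical client credentials; a real deployment would use a secrets store.
API_KEYS: Dict[str, str] = {"erp-connector": "example-key"}

# Only these predefined prompts may reach the model (illustrative wording).
PROMPT_TEMPLATES: Dict[str, Template] = {
    "contract_review": Template(
        "List the termination clauses in this contract:\n$document"
    ),
}


def gateway(request: dict, model: Callable[[str], str]) -> dict:
    """Authenticate the caller, check the use case, then call the model."""
    expected = API_KEYS.get(request.get("client"))
    supplied = str(request.get("api_key", ""))
    # compare_digest avoids leaking key contents through timing differences.
    if expected is None or not hmac.compare_digest(expected, supplied):
        return {"status": 401, "error": "unknown client or invalid key"}

    template = PROMPT_TEMPLATES.get(request.get("use_case"))
    if template is None:
        return {"status": 400, "error": "use case not allowed"}

    prompt = template.substitute(document=request.get("document", ""))
    return {"status": 200, "output": model(prompt)}


def stub_model(prompt: str) -> str:
    """Placeholder for the deployed LLM."""
    return "no termination clauses found"


response = gateway(
    {
        "client": "erp-connector",
        "api_key": "example-key",
        "use_case": "contract_review",
        "document": "Sample contract text.",
    },
    stub_model,
)
print(response)
```

Callers never send free-form prompts in this sketch, so the gateway is the single place where prompt wording and access rules are maintained.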

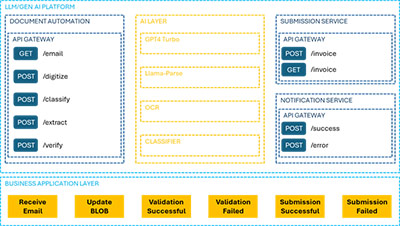

Practical Application: Document Automation and Accounts Payable

In the context of document automation, the process begins with an email trigger. Autonomous microservices handle pre-processing, digitization, classification, and data extraction, validating and storing results automatically. The system independently manages the workflow, integrating extracted data into legacy systems such as an ERP. This practical application demonstrates the LLM's ability to function as a deputy, managing complex tasks from start to finish (Figure 2).
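The validation step in such an accounts payable flow might look like the sketch below, which checks extracted invoice fields and routes only exceptional cases to a person. The field names, the rounding tolerance of 0.01, and the route labels `post_to_erp` and `manual_review` are assumptions for illustration, not details taken from the platform described here.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ExtractedInvoice:
    """Fields an extraction step might return (hypothetical schema)."""
    invoice_number: Optional[str]
    supplier: Optional[str]
    net_amount: Optional[float]
    tax_amount: Optional[float]
    total_amount: Optional[float]


def validate(invoice: ExtractedInvoice) -> List[str]:
    """Return a list of problems; an empty list means the data looks consistent."""
    problems = []
    for name in ("invoice_number", "supplier", "total_amount"):
        if getattr(invoice, name) in (None, ""):
            problems.append(f"missing {name}")

    amounts = (invoice.net_amount, invoice.tax_amount, invoice.total_amount)
    if all(a is not None for a in amounts):
        # Allow a small tolerance for rounding differences.
        if abs(invoice.net_amount + invoice.tax_amount - invoice.total_amount) > 0.01:
            problems.append("net + tax does not match total")
    return problems


def route(invoice: ExtractedInvoice) -> str:
    """Send clean invoices to the ERP step, everything else to a person."""
    return "manual_review" if validate(invoice) else "post_to_erp"


good = ExtractedInvoice("INV-1", "Acme", 100.0, 22.0, 122.0)
bad = ExtractedInvoice("INV-2", "Acme", 100.0, 22.0, 130.0)
print(route(good), route(bad))
```

Keeping the checks in one function makes the boundary between autonomous handling and human intervention explicit and easy to adjust.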

Benefits of a Deputy-Centered LLM/GenAI Platform

1. Service Encapsulation: Modular microservices enable scalable, flexible development.

2. No Model Training: LLMs allow prompt-based optimization, eliminating the need for continuous retraining.

3. Deputy-Like Autonomy: Full automation of specified use cases with minimal human oversight, integrating results seamlessly into legacy systems.

4. Controlled AI Usage: The architecture ensures secure, predefined use of LLMs, with optimized prompts and secure API gateways.

5. Scalability and Flexibility: A serverless, modular design supports pay-per-use models, rapid development, and easy integration of new use cases.

Outlook

As LLM capabilities continue to advance, the deputy-centered approach will unlock even more potential applications. With the cost of processing decreasing and the complexity of tasks that can be automated increasing, deploying an LLM/GenAI platform as described ensures that organizations are well-positioned to integrate future use cases efficiently, with minimal cost and time investment.
